Reflections on AI

After having all kinds of thoughts about AI, particularly after reading Nick Bostrom’s Superintelligence a few months ago, I stumbled on Aeon’s article Raising Good Robots by Regina Rini.1 The article made me reflect on my own beliefs about AI.

I think that an AI more intelligent than a human in every single domain will eventually become a reality if current technological trends and socio-economic stability continue. Whatever rate of progress you assume, as long as progress continues at all, AI is no longer a question of if; it becomes a question of when. I don’t think anyone today can say when an AI breakthrough will occur, but I think it is reasonable to say that it is more likely to occur eventually than not, especially at the current pace of technological advancement.

This is the basis of my belief, which I think falls somewhere between these two schools of belief on AI:2

Singularitarianism: people who believe in the invention of an artificial superintelligence that will trigger runaway technological growth, resulting in unfathomable changes to human civilization.3

AItheism (AI-atheism): disbelievers, who don’t think that an artificial superintelligence is possible.

I consider myself closer to the singularitarian group. While there are plenty of arguments out there for this view, I have come up with my own, which attempts to illustrate why AI lies within the human future.

Natural Evolution

My reason comes from the rule that allowed life to proliferate on Earth 3 billion years ago in the first place. A sentient and relatively intelligent living being can read and comprehend these words (assuming you are not a robot), even though 3 billion years ago its origins were nothing but a part of the Earth’s primeval ocean. I think this fact slips past many minds when it comes to thinking about the possibility of creating non-biological intelligence.

Natural evolution shows that intelligent life can arise from a primeval soup of amino acids and other molecules. It took more than 3 billion years for single-celled organisms to evolve into Homo sapiens, whose brain is the most complicated thing known to science (apart, perhaps, from black holes and quantum theory). The human brain is made of small constituents, cells and molecules, which science can explain individually. Yet science struggles to understand and formalize what happens when all these parts start functioning together. That is the puzzle of the brain.

Life as we know it arose out of relatively simple constituents, given enough time. I see no compelling reason to believe that something as intricate and complex as intelligence will not eventually emerge out of non-biological parts. The marvel of natural selection is that it is able to create sentient life out of biological material over billions of trial-and-error cycles. As long as there is something that can learn from trial and error, take advantage of iterative design, and improve, intelligence does not have to emerge only out of biology. And according to Daniel Dennett, intelligence does not necessarily require comprehension, and potentially does not require consciousness of itself either.

AI Evolution

The same iterative technique that allowed life to emerge is being actively used to train computers. Most recent successes in AI are the result of “training”, where the AI goes through millions of iterations before its algorithms (for example, convolutional neural networks, or CNNs) become good at something. For biology, these iterations have taken millions of years; for a computer, they take a few days. Humans have already discovered very promising ingredients for non-biological artificial intelligence; it is just a matter of time before the recipe is found.
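To make the idea of iterative trial and error concrete, here is a toy sketch in Python. It is only an illustration under my own assumptions: the target phrase, the alphabet, and the function names are all made up for this example, and real neural networks are trained with gradient-based methods rather than random mutation. The program starts from a random string, changes one character at a time, and keeps a change only if it does not make the result worse.

```python
import random


def fitness(candidate: str, target: str) -> int:
    """Count the positions where the candidate already matches the target."""
    return sum(a == b for a, b in zip(candidate, target))


def mutate(candidate: str, alphabet: str, rng: random.Random) -> str:
    """Return a copy of the candidate with one random position replaced."""
    i = rng.randrange(len(candidate))
    return candidate[:i] + rng.choice(alphabet) + candidate[i + 1:]


def evolve(target: str, alphabet: str, seed: int = 0, max_iters: int = 100_000):
    """Trial and error: keep a mutation only if it does not lower the fitness."""
    rng = random.Random(seed)
    # Start from a completely random string of the right length.
    current = "".join(rng.choice(alphabet) for _ in target)
    for iteration in range(max_iters):
        if current == target:
            return current, iteration
        trial = mutate(current, alphabet, rng)
        if fitness(trial, target) >= fitness(current, target):
            current = trial
    return current, max_iters


# Hypothetical target phrase and alphabet, chosen only for illustration.
ALPHABET = "abcdefghijklmnopqrstuvwxyz "
best, iterations = evolve("intelligence can emerge", ALPHABET)
print(best, iterations)
```

Nothing in the loop “understands” the phrase. It only tries, compares, and keeps what works, and this is enough to reach the target in a number of iterations that a computer gets through almost instantly.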

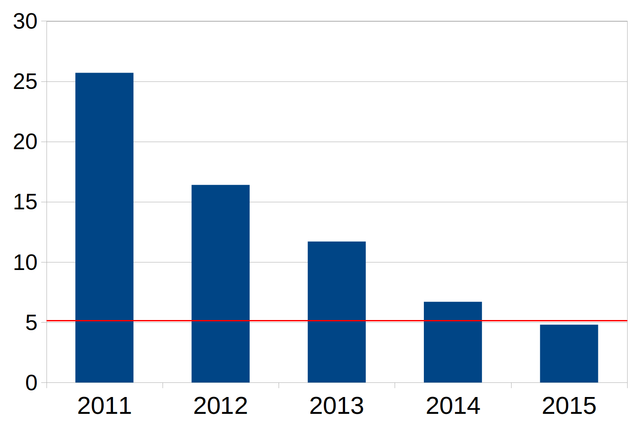

Today, an AI algorithm can be trained in a matter of months, or even days, in contrast to the 3 billion years it took for life to evolve into humans. Such algorithms already match or come close to human performance in certain areas. IBM’s speech-recognition AI, for example, achieved a 5.5% error rate in 2017, very close to the error rate of humans when understanding speech, which is estimated at around 5.1%.4 In 2016, Google reported astounding results in AI research: its trained AI achieved a 6.1% error rate when describing images in words.5

The error rate of machine classification of images, such as the one researched by Google. The red line is the error rate of a human, which Google’s algorithms have surpassed; these algorithms are already being applied in our daily digital lives.6

Of course, these AIs are referred to as weak (or narrow) AI because each is better than humans only in a very narrow domain. A general AI is something that is better than humans in many domains, if not all of them. This is an important distinction. We are still far from creating a general AI, but progress so far does suggest that there are fewer and fewer reasons to think it won’t eventually become a reality, especially since AI is already proving itself better than humans in many narrow domains. I can see how narrow AI will eventually become better at 80% of human occupations, including narrow but very crucial capacities such as understanding and speaking a language, synthesizing concepts, and forming a memory of past events. At that point, the step from weak AI to general AI will become a matter of configuration.

I hope this was convincing enough to show that AI won’t remain a matter of science fiction forever, and will eventually become a reality. It is only a question of when, not a question of if. For a very interesting paper on the subject, check out Nick Bostrom’s paper How Long Before Superintelligence, written in 1997.7

1. Raising Good Robots by Regina Rini. Published 18 April 2017.
2. Luciano Floridi, in his essay Should We Be Afraid of AI?, nicely categorizes the two “schools” of belief about AI proliferation into Singularitarianism and AItheism.
3. Technological singularity, from Wikipedia.
4. IBM achieves new record in speech recognition, from IBM Research Blog.
5. Show and Tell: image captioning open sourced in TensorFlow.
6. Source: Wikipedia, Classification of images progress human.
7. Nick Bostrom, How Long Before Superintelligence. 1997. Oxford Future of Humanity Institute.